PyTorch Matrix-Vector Multiplication

If the first argument to `torch.matmul` is 2-dimensional and the second argument is 1-dimensional, the matrix-vector product is returned. A linear layer (`nn.Linear`) takes in_features values as input and produces out_features values.
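As a quick illustration of the 2-D × 1-D case, here is a minimal sketch; the tensor values are arbitrary choices for the example.

```python
import torch

# A 2-D matrix (3 x 4) and a 1-D vector of size 4
mat = torch.arange(12, dtype=torch.float32).reshape(3, 4)
vec = torch.tensor([1.0, 0.0, -1.0, 2.0])

# 2-D @ 1-D: torch.matmul returns the matrix-vector product, a 1-D tensor of size 3
out = torch.matmul(mat, vec)
print(out.shape)  # torch.Size([3])

# torch.mv computes the same matrix-vector product explicitly
print(torch.equal(out, torch.mv(mat, vec)))  # True
```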

For the matrix product m1 × m2, where m1 has size H1 × W1 and m2 has size H2 × W2, we need to make sure that W1 = H2; the result will then have size H1 × W2.
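A small sketch of the shape rule; `matmul_result_shape` is a hypothetical helper written for this example, not a PyTorch function.

```python
import torch

def matmul_result_shape(m1, m2):
    """Hypothetical helper: shape of m1 @ m2, checking the W1 == H2 rule first."""
    h1, w1 = m1.shape
    h2, w2 = m2.shape
    if w1 != h2:
        raise ValueError(f"inner sizes differ: W1={w1}, H2={h2}")
    return (h1, w2)

m1 = torch.randn(2, 3)  # H1 x W1 = 2 x 3
m2 = torch.randn(3, 5)  # H2 x W2 = 3 x 5

print(matmul_result_shape(m1, m2))  # (2, 5)
print(torch.mm(m1, m2).shape)       # torch.Size([2, 5])

# Reversing the operands violates the rule (W2 = 5, H1 = 2), so torch.mm raises
swapped_failed = False
try:
    torch.mm(m2, m1)
except RuntimeError:
    swapped_failed = True
print(swapped_failed)  # True
```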

In PyTorch, unlike NumPy, 1D tensors are not interchangeable with 1×N or N×1 tensors. The GCS sparse format is special in that it drops down to the usual CSR format for 2D tensors and supports multi-dimensional data using the same data structures as CSR, but with a slightly augmented indexing scheme.
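To see why 1-D tensors differ from 1×N and N×1 tensors, the sketch below builds all three from the same values; it relies only on standard `torch` calls (`unsqueeze`, `@`, `torch.mm`).

```python
import torch

v = torch.tensor([1.0, 2.0, 3.0])  # 1-D, shape (3,)
row = v.unsqueeze(0)               # 1 x N, shape (1, 3)
col = v.unsqueeze(1)               # N x 1, shape (3, 1)

# Same numbers, different shapes, so the products behave differently
inner = row @ col  # (1 x 3) @ (3 x 1) -> 1 x 1
outer = col @ row  # (3 x 1) @ (1 x 3) -> 3 x 3

# torch.mm accepts only 2-D tensors, so the 1-D v is rejected
A = torch.eye(3)
rejected = False
try:
    torch.mm(A, v)
except RuntimeError:
    rejected = True

print(inner.shape, outer.shape, rejected)  # torch.Size([1, 1]) torch.Size([3, 3]) True
print(torch.mm(A, col).shape)              # torch.Size([3, 1])
```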

In ML/DL, the matrix is very important because it makes data handling and representation easy, so PyTorch provides tensors for handling matrices and higher-dimensional arrays. We need to learn how to work with matrices and vectors and how to compute with them in Python.

If the first argument to `torch.matmul` is 1-dimensional and the second argument is 2-dimensional, a 1 is prepended to the first argument's dimensions for the purpose of the matrix multiply. It can be implemented efficiently, supports sparse inputs, and provides good capacity.
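The prepend-then-remove behaviour can be checked in a short sketch; the explicit `unsqueeze`/`squeeze` version only illustrates the semantics and is not meant to describe how `torch.matmul` works internally.

```python
import torch

vec = torch.tensor([1.0, 2.0])              # shape (2,)
mat = torch.tensor([[1.0, 0.0, 3.0],
                    [0.0, 1.0, 4.0]])       # shape (2, 3)

# matmul treats vec as shape (1, 2), multiplies to (1, 3), then drops the prepended dim
out = torch.matmul(vec, mat)
print(out.shape)  # torch.Size([3])

# The same result with the prepend and remove steps written out
explicit = torch.mm(vec.unsqueeze(0), mat).squeeze(0)
print(torch.equal(out, explicit))  # True
```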

For matrix multiplication of 2D tensors in PyTorch, use `torch.mm`. A linear (fully connected) layer is essentially a matrix multiplication, plus an optional bias term. In a matrix, each element is denoted by a variable with two subscripts, such as a₂₁, which means the element in the second row and first column.
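The sketch below checks, assuming default `nn.Linear` settings (bias enabled), that a linear layer applied to a single vector equals a matrix-vector product plus the bias. PyTorch indexes from 0, so a₂₁ corresponds to `weight[1, 0]`.

```python
import torch
from torch import nn

torch.manual_seed(0)

in_features, out_features = 4, 3
layer = nn.Linear(in_features, out_features)  # weight: (out_features, in_features), bias: (out_features,)

x = torch.randn(in_features)  # one input vector of size in_features

# nn.Linear computes x @ W^T + b; for a single vector this is W @ x + b
y_layer = layer(x)
y_manual = torch.mv(layer.weight, x) + layer.bias

print(y_layer.shape)                      # torch.Size([3])
print(torch.allclose(y_layer, y_manual))  # True

# Element a_21 (second row, first column) with 0-based indexing
a21 = layer.weight[1, 0]
```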

NumPy's `np.dot`, in contrast, is more flexible. While ready to be reviewed, the architecture and naming scheme is …

Recall that the size of m1 is H1 × W1 and the size of m2 is H2 × W2. A PyTorch pull request introduces the GCS format for efficient storage of, and operations on, multi-dimensional tensors.

Another variant is matrix multiplication between a vector-wise sparse matrix A and a dense matrix B.

Note that since `torch.chain_matmul` is a function to compute the product of N matrices, N needs to be greater than or equal to 2. A sparse matrix has a lot of zeros in it, so it can be stored and operated on in ways different from a regular dense matrix. Vector operations come in different types, such as mathematical operations, the dot product, and `linspace`.
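A minimal sketch of a sparse matrix-vector product, assuming the COO layout produced by `Tensor.to_sparse()`; because `torch.sparse.mm` takes 2-D operands, the vector is treated as a single column.

```python
import torch

# A mostly-zero 3 x 4 matrix, converted to sparse COO layout
dense = torch.tensor([[0.0, 0.0, 2.0, 0.0],
                      [1.0, 0.0, 0.0, 0.0],
                      [0.0, 0.0, 0.0, 3.0]])
sparse = dense.to_sparse()                 # stores only the non-zero values and their indices
nnz = sparse.coalesce().values().numel()   # 3 stored values instead of 12

x = torch.tensor([1.0, 2.0, 3.0, 4.0])

# Sparse matrix times a 4 x 1 column, then drop the column dimension
y = torch.sparse.mm(sparse, x.unsqueeze(1)).squeeze(1)
print(y)
print(torch.allclose(y, dense @ x))  # True
```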

In part 1, I analyzed the execution times for sparse matrix multiplication in PyTorch on a CPU. Here's a quick recap: the operation is y = Ax + b, where … In the quantized case, the computation is C = A × B, where A is uint8, B is int8, and C is the int32 result.
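The quantized code itself is not shown here, so this is only a plain-PyTorch emulation (not the FBGEMM API) of a uint8 × int8 product accumulated in int32. It assumes hypothetical per-tensor scales and zero points of 0, in which case multiplying C by A_scale · B_scale approximately recovers the float product.

```python
import torch

torch.manual_seed(0)
A = torch.rand(3, 4)   # non-negative values, suited to uint8
B = torch.randn(4, 2)  # signed values, suited to int8

# Hypothetical per-tensor scales; zero points are assumed to be 0
A_scale = A.max().item() / 255
B_scale = B.abs().max().item() / 127

A_q = torch.round(A / A_scale).clamp(0, 255).to(torch.uint8)
B_q = torch.round(B / B_scale).clamp(-127, 127).to(torch.int8)

# C = A_q @ B_q accumulated in int32: widen, multiply with broadcasting, sum the inner dim
C = (A_q.to(torch.int32).unsqueeze(2) * B_q.to(torch.int32).unsqueeze(0)).sum(dim=1, dtype=torch.int32)
print(C.dtype, C.shape)  # torch.int32 torch.Size([3, 2])

# With zero points of 0, the float product is approximately A_scale * B_scale * C
C_float = A_scale * B_scale * C.to(torch.float32)
print((C_float - A @ B).abs().max())
```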

This is a huge improvement on PyTorch sparse matrices.

Xintian Yang, Srinivasan Parthasarathy and P. Sadayappan (Department of Computer Science and Engineering, Ohio State University) address scaling up the sparse matrix-vector multiplication kernel in "Fast Sparse Matrix-Vector Multiplication on GPUs: Implications for Graph Mining". One of the ways to easily compute the product of two matrices is to use the methods provided by PyTorch.

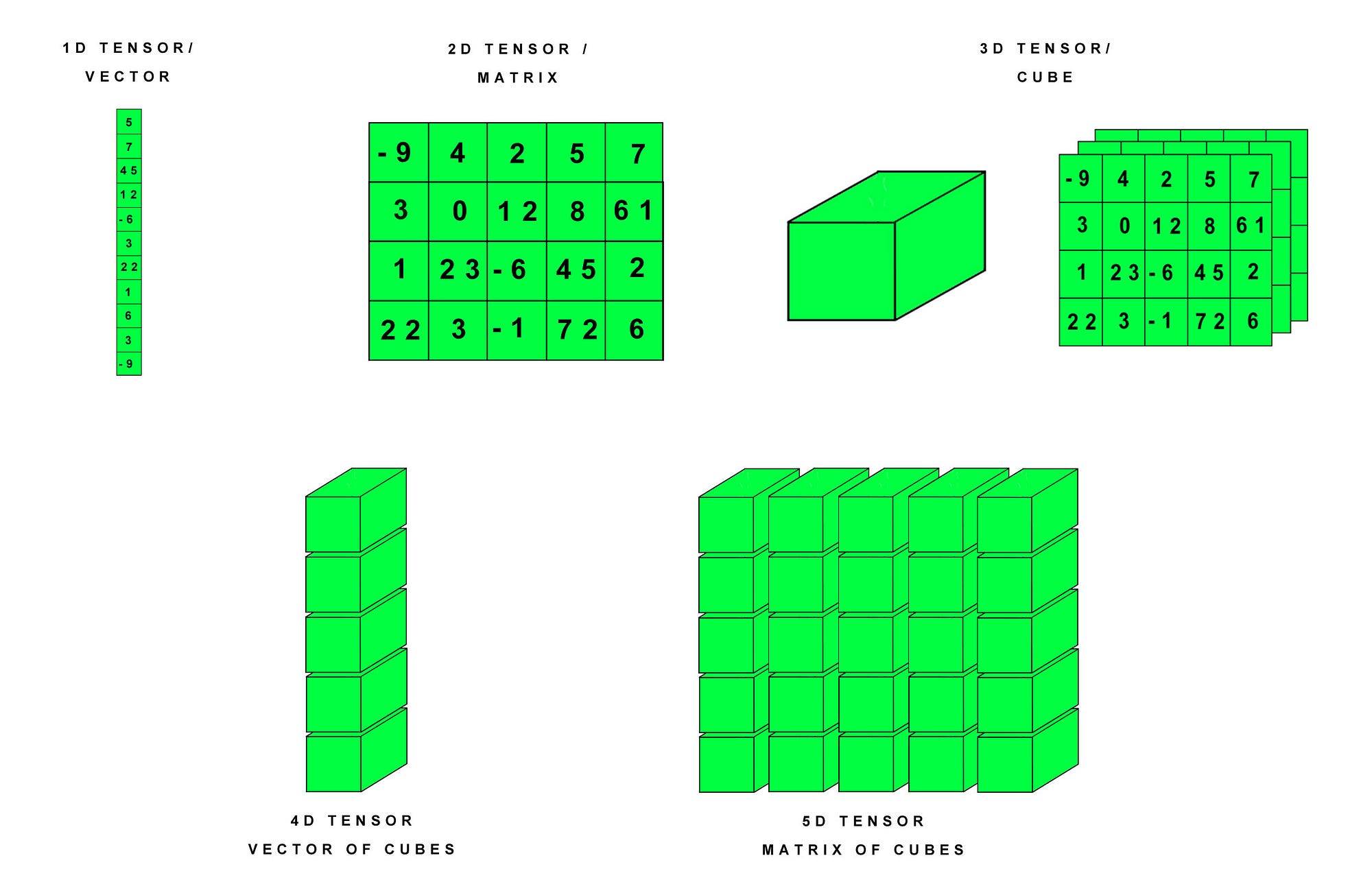

Vectors are one-dimensional tensors used to manipulate data. If I create `B = torch.rand(4, 1)`, I have a column vector, and matrix multiplication with `torch.mm` works as expected. `torch.addmm(input, mat1, mat2, *, beta=1, alpha=1, out=None) → Tensor` performs a matrix multiplication of the matrices `mat1` and `mat2`.
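A short sketch of both points: a 4×1 column vector works with `torch.mm`, and `torch.addmm` computes `beta * input + alpha * (mat1 @ mat2)`. The values of `beta` and `alpha` here are arbitrary.

```python
import torch

torch.manual_seed(0)
A = torch.rand(3, 4)
B = torch.rand(4, 1)         # 4 x 1 column vector, so torch.mm accepts it
prod = torch.mm(A, B)
print(prod.shape)            # torch.Size([3, 1])

# torch.addmm: beta * input + alpha * (mat1 @ mat2)
inp = torch.ones(3, 1)
out = torch.addmm(inp, A, B, beta=0.5, alpha=2.0)
print(torch.allclose(out, 0.5 * inp + 2.0 * (A @ B)))  # True
```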

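PyTorch is a Python library for deep learning that is fairly easy to use yet gives the user a lot of control. As an example, consider a function `f(x1, x2)` that computes an intermediate value `a` from `x1` and `x2` and returns `y1 = log(a)` and `y2 = sin(x2)`, followed by a function `g(y1, y2)` that consumes those outputs.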
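The text does not spell out how `a` is formed, so this sketch assumes `a = x1 * x2` (a hypothetical choice) and uses `torch.autograd.functional.jacobian` to compute the 2×2 Jacobian of `f` at one point.

```python
import torch
from torch.autograd.functional import jacobian

def f(x1, x2):
    # Hypothetical: a = x1 * x2 is assumed for illustration
    a = x1 * x2
    y1 = torch.log(a)
    y2 = torch.sin(x2)
    return y1, y2

x1 = torch.tensor(2.0)
x2 = torch.tensor(0.5)

# jac[i][j] is d y_i / d x_j, each a 0-dim tensor (zeros where y_i ignores x_j)
jac = jacobian(f, (x1, x2))
J = torch.stack([torch.stack(row) for row in jac])  # assemble the 2 x 2 matrix
print(J)
```

With `a = x1 * x2`, the entries are 1/x1, 1/x2, 0 and cos(x2).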

In a linear layer, x is the input column vector of size in_features. When `torch.matmul` prepends a 1 to a 1-dimensional first argument, the prepended dimension is removed after the matrix multiply.

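In this article, we are going to discuss vector operations in PyTorch. For the uint8 × int8 product above, you can convert C to float by multiplying it by A_scale · B_scale (assuming the zero points of A and B have been accounted for); a requantized output with its own C_scale and C_zero_point converts to float as C_scale · (C − C_zero_point). For `torch.chain_matmul`, if N is equal to 2, a trivial matrix-matrix product is returned.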
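A few basic vector operations in a minimal sketch; the input values are arbitrary.

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([4.0, 5.0, 6.0])

total = a + b                             # elementwise sum: tensor([5., 7., 9.])
prod = a * b                              # elementwise product
dot = torch.dot(a, b)                     # dot product: 1*4 + 2*5 + 3*6 = 32
grid = torch.linspace(0, 1, steps=5)      # 5 evenly spaced values from 0 to 1
print(total, prod, dot, grid)
```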

Matrix multiplication in Python can be done with PyTorch. For matrix-vector products, `torch.mv(mat, vec)` multiplies a matrix by a vector: for example, a 2×4 `mat` times a 4-element `vec` gives a vector of size 2. Finally, in backpropagation, the downstream gradient tensors are computed by the matrix-vector multiplication of the local Jacobian matrices with the upstream gradient tensors.
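To make the matrix-vector view of backpropagation concrete, this sketch compares autograd's gradient with a hand-built product Jᵀ · upstream. Both `f` (with `a = x1 * x2`) and `g(y1, y2) = y1 * y2` are hypothetical definitions chosen for illustration.

```python
import math
import torch

def f(x1, x2):
    a = x1 * x2                      # hypothetical: how a is formed is assumed
    return torch.log(a), torch.sin(x2)

def g(y1, y2):
    return y1 * y2                   # hypothetical body for g

x = torch.tensor([2.0, 0.75], requires_grad=True)
y1, y2 = f(x[0], x[1])
loss = g(y1, y2)
loss.backward()                      # autograd stores d loss / dx in x.grad

# Same gradient by hand: downstream = J^T @ upstream
x1, x2 = x.detach().tolist()
J = torch.tensor([[1 / x1, 1 / x2],         # d y1 / d(x1, x2) for y1 = log(x1 * x2)
                  [0.0, math.cos(x2)]])      # d y2 / d(x1, x2) for y2 = sin(x2)
upstream = torch.stack([y2.detach(), y1.detach()])  # d loss / d(y1, y2) for g = y1 * y2
downstream = torch.mv(J.T, upstream)
print(torch.allclose(downstream, x.grad))   # True
```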

`torch.chain_matmul` computes the product using the matrix chain order algorithm, which selects the multiplication order that incurs the lowest cost in terms of arithmetic operations (CLRS). PyTorch Geometric (PyG; Fey). Their current implementation is an order of magnitude slower than the dense one.
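Because `torch.chain_matmul` is deprecated in recent PyTorch releases, this sketch uses its replacement `torch.linalg.multi_dot`. The shapes are arbitrary, picked so that the two parenthesizations differ in cost by a factor of ten.

```python
import torch

torch.manual_seed(0)
A = torch.randn(10, 100, dtype=torch.float64)
B = torch.randn(100, 5, dtype=torch.float64)
C = torch.randn(5, 50, dtype=torch.float64)

# Scalar multiplications needed by each parenthesization
cost_left = 10 * 100 * 5 + 10 * 5 * 50     # (A @ B) @ C -> 7500
cost_right = 100 * 5 * 50 + 10 * 100 * 50  # A @ (B @ C) -> 75000

# multi_dot reorders the products to minimize arithmetic operations
out = torch.linalg.multi_dot([A, B, C])
print(out.shape)                          # torch.Size([10, 50])
print(torch.allclose(out, (A @ B) @ C))   # True
```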

Currently, PyTorch does not support matrix multiplication with the layout signature M[strided] @ M[sparse_coo]. However, applications can still compute this using the matrix relation D @ S == (S.t() @ D.t()).t(). Likewise, for m2 × m1 we need W2 = H1, and the result has size H2 × W1. Now, if we derive this by hand using the chain rule and the definition of the derivatives, we obtain the following set of identities that we can directly plug into the Jacobian matrix of …
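A minimal sketch of the transpose relation, assuming a COO sparse `S` and a dense `D`; `torch.sparse.mm` is called with the sparse operand first, so the dense @ sparse product never has to be formed directly.

```python
import torch

torch.manual_seed(0)
D = torch.randn(2, 3)                        # dense (strided) matrix
S = torch.tensor([[0.0, 1.0],
                  [0.0, 0.0],
                  [2.0, 0.0]]).to_sparse()   # sparse COO matrix, 3 x 2

# D @ S computed as (S^T @ D^T)^T, keeping the sparse operand on the left
result = torch.sparse.mm(S.t().coalesce(), D.t().contiguous()).t()
print(result.shape)                               # torch.Size([2, 2])
print(torch.allclose(result, D @ S.to_dense()))   # True
```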

It becomes complicated when the matrix is huge. Being a general formula, this multiplication process involves flattening the higher-dimensional tensors of the output as well as the input into giant vectors to finally achieve the …
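To see the flattening concretely, this sketch computes the Jacobian of a hypothetical matrix-valued function `h(X) = W @ X` with `torch.autograd.functional.jacobian` and reshapes it into an ordinary 2-D matrix.

```python
import torch
from torch.autograd.functional import jacobian

torch.manual_seed(0)
W = torch.randn(2, 3)

def h(X):
    # Hypothetical function: matrix-valued output from a matrix-valued input
    return W @ X

X = torch.randn(3, 4)
J = jacobian(h, X)
print(J.shape)  # torch.Size([2, 4, 3, 4]): output shape followed by input shape

# Flatten output and input into vectors to get an ordinary 8 x 12 Jacobian
J2d = J.reshape(h(X).numel(), X.numel())
print(J2d.shape)  # torch.Size([8, 12])

# h is linear, so the flattened Jacobian times the flattened input gives the flattened output
print(torch.allclose(J2d @ X.reshape(-1), h(X).reshape(-1), atol=1e-5))  # True
```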

In `torch.addmm`, the matrix `input` is added to the final result. Matrix multiplication is an integral part of scientific computing.

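`torch.addmv(M, mat, vec)` adds a vector to a matrix-vector product: with a 2-element `M`, a 2×3 `mat`, and a 3-element `vec`, the result is the 2-element vector `M + mat @ vec`. If instead I create `B = torch.rand(4)`, B is a 1D tensor rather than a column vector, and `torch.mm` will not accept it.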
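The code fragments scattered through this page appear to come from a matrix-vector example using `torch.mv` and `torch.addmv`; the sketch below is a reassembly under that assumption, with the variables renamed so both results stay available.

```python
import torch

torch.manual_seed(0)

# Matrix x vector: (2 x 4) @ (4,) -> (2,)
mat1 = torch.randn(2, 4)
vec1 = torch.randn(4)
r_mv = torch.mv(mat1, vec1)

# Vector + matrix x vector: M + (2 x 3) @ (3,) -> (2,)
M = torch.randn(2)
mat2 = torch.randn(2, 3)
vec2 = torch.randn(3)
r_addmv = torch.addmv(M, mat2, vec2)

print(r_mv.shape, r_addmv.shape)  # torch.Size([2]) torch.Size([2])
```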

NumPy's `np.dot` computes the inner product for 1D arrays and performs matrix multiplication for 2D arrays. By popular demand, the function `torch.matmul` performs matrix multiplication if both arguments are 2D and computes their dot product if both arguments are 1D.
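A short sketch contrasting the two cases `torch.matmul` handles here; the values are arbitrary and NumPy is not needed.

```python
import torch

u = torch.tensor([1.0, -1.0, 2.0])
v = torch.tensor([3.0, 4.0, 5.0])
d = torch.matmul(u, v)   # both 1-D: dot product, a 0-dim tensor (3 - 4 + 10 = 9)

P = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
Q = torch.tensor([[0.0, 1.0], [1.0, 0.0]])
PQ = torch.matmul(P, Q)  # both 2-D: ordinary matrix product (swaps P's columns)

print(d, PQ)
```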

PyTorch is an optimized tensor library used primarily for deep learning applications on GPUs and CPUs.
