TensorFlow Matrix Multiplication Batch

Is there any way to speed up the batch matrix-matrix product computation? Julia uses `*` for matrix multiplication and `.*` for element-wise multiplication.

How Does Tensorflow Batch Matmul Work Stack Overflow

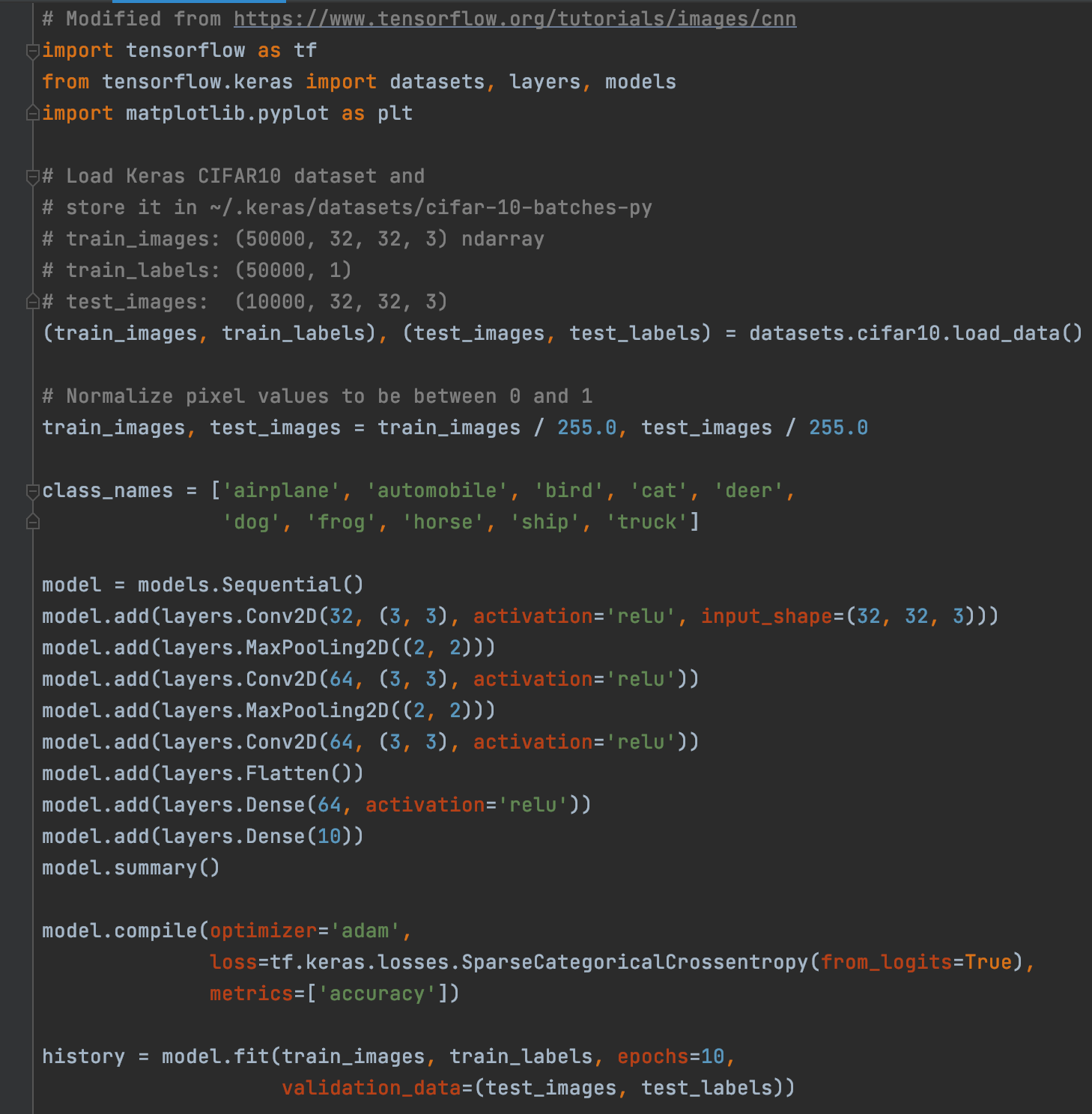

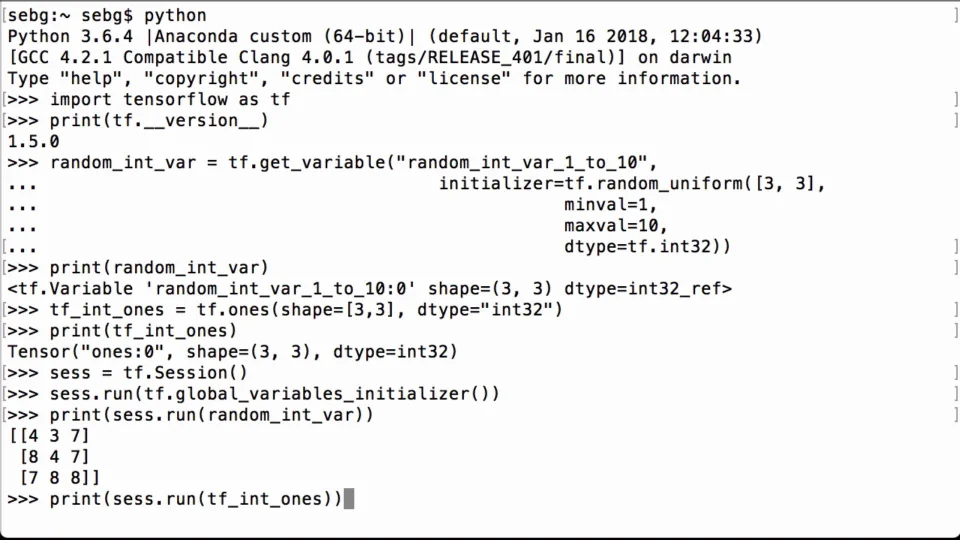

`x = np.arange(12).astype(np.int32).reshape(2, 3, 2)`; `y = np.arange(6).astype(np.int32).reshape(2, 3)`; `X = tf.constant(x)`; `Y = tf.constant(y)`; `with tf.Session() as sess:`

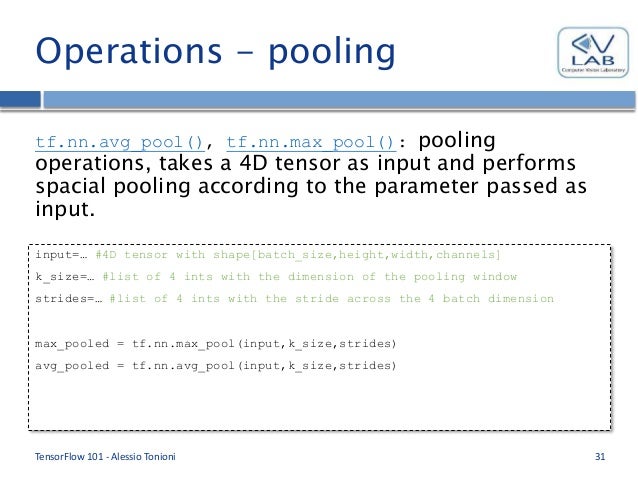

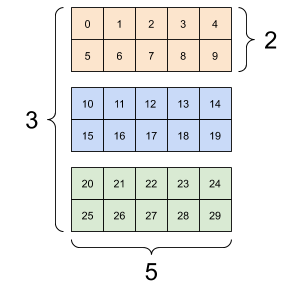

So you can see now why we use the ones. A 2x3 matrix: `a = tf.constant(np.array([[1, 2, 3], [10, 20, 30]]), dtype=tf.float32)`. Another 2x3 matrix `b`. The inputs must, following any transpositions, be tensors of rank >= 2 where the inner 2 dimensions specify valid matrix multiplication dimensions, and any further outer dimensions specify matching batch size.
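The shape rule above can be sketched with NumPy, whose `np.matmul` follows the same batch convention as `tf.matmul` for inputs of rank >= 2. The shapes and variable names below are illustrative assumptions, not taken from the original snippets.

```python
import numpy as np

# Two batches of matrices: 5 slices of 2x3 and 5 slices of 3x4 (assumed sizes).
a = np.arange(5 * 2 * 3, dtype=np.float32).reshape(5, 2, 3)
b = np.arange(5 * 3 * 4, dtype=np.float32).reshape(5, 3, 4)

# The inner two dimensions (2x3 times 3x4) are multiplied;
# the outer dimension (5) is the batch size and must match.
c = np.matmul(a, b)

# Reference: the ordinary product of each matching pair of slices.
slice_products = np.stack([a[i] @ b[i] for i in range(5)])

print(c.shape)
```

Each output slice `c[i]` is simply `a[i] @ b[i]`, which is all that "batch" means here.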

`tf.transpose(a, perm=None, conjugate=False, name='transpose')` permutes the dimensions according to `perm`. So 4x1 + 3x1 + 7x1.

`float16`, `float32`, `float64`, `int32`, `complex64`, `complex128`. Simple batch matrix multiplication. On the other hand, if efficiency is important, it may be better to reshape `embed` to be a 2D tensor so the multiplication can be done with a single `matmul`.
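A minimal sketch of the reshape trick mentioned above, written in NumPy as a stand-in for `tf.reshape` and `tf.matmul`. The names `embed` and `U` come from the text; the concrete sizes are assumptions chosen only for illustration.

```python
import numpy as np

batch, n, m, c = 4, 3, 5, 2            # illustrative sizes (assumptions)
rng = np.random.default_rng(0)
embed = rng.standard_normal((batch, n, m))
U = rng.standard_normal((m, c))

# Flatten the batch and row dimensions so one 2D matmul covers every slice.
flat = embed.reshape(-1, m)            # shape (batch * n, m)
h = (flat @ U).reshape(-1, n, c)       # back to shape (batch, n, c)

# Reference: multiply each slice by U separately.
h_loop = np.stack([embed[i] @ U for i in range(batch)])

print(h.shape)
```

This works because every slice is multiplied by the same 2D matrix, so stacking the rows of all slices does not change any individual row-times-matrix product.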

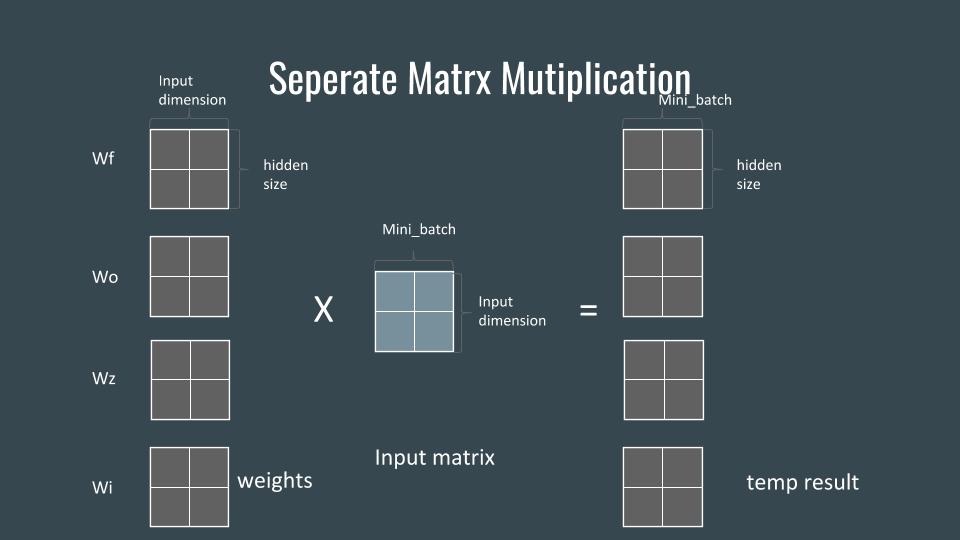

Understand batch matrix multiplication: matrix multiplication when tensors are matrices. Instead, all args were concatenated and the weights were chosen to have rank 2, i.e. To perform elementwise multiplication on tensors, you can use either of the following: `a * b` or `tf.multiply(a, b)`.
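To make the difference between the elementwise product and the matrix product concrete, here is a small NumPy sketch; `np.multiply` and `*` behave like `tf.multiply` and `*` on tensors for this case. Matrix `a` uses the values quoted elsewhere on this page, while `b` is a hypothetical second 2x3 matrix.

```python
import numpy as np

a = np.array([[1, 2, 3], [10, 20, 30]], dtype=np.float32)
b = np.array([[2, 2, 2], [3, 3, 3]], dtype=np.float32)  # hypothetical values

# Two equivalent ways to multiply entry by entry.
elementwise_op = a * b
elementwise_fn = np.multiply(a, b)

# A 2x3 by 2x3 matrix product is not defined, so b is transposed first.
matrix_prod = a @ b.T

print(elementwise_op)
print(matrix_prod)
```

The elementwise result keeps the 2x3 shape, whereas the matrix product of a 2x3 and a 3x2 matrix is 2x2.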

Each of the individual slices can optionally be adjointed (to adjoint a matrix means to transpose and conjugate it) before multiplication by setting the `adj_x` or `adj_y` flag to `True`; both are `False` by default. `print(sess.run(tf_matrix_multiplication_prod))`. We see 14, 14, 14. `y_masked = tf.multiply(tf.expand_dims(self.mask, 2), self.y)`; `inputs = tf.concat([y_masked, self.u, tf.expand_dims(self.mask, 2)], axis=2)`; `y_prev = tf.expand_dims(self.y_0, 0)  # (1, dim_y)`; `y_prev = tf.tile(y_prev, (tf.shape(self.mu)[0], 1))`; `alpha, state, u, buffer = self.alpha(y_prev, self.state, self.u[:, 0], init_buffer=True, reuse=reuse)`; `# dummy matrix to initialize B and C in scan`; `dummy_init_A`.
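The adjoint operation behind the `adj_x` and `adj_y` flags can be illustrated with plain NumPy. This is only a sketch of what "transpose and conjugate" means; the complex matrices are made-up examples.

```python
import numpy as np

x = np.array([[1 + 2j, 3 - 1j],
              [0 + 1j, 2 + 0j]])
y = np.array([[2 - 1j, 1 + 0j],
              [1 + 1j, 0 + 3j]])

# Adjoint = transpose followed by complex conjugation.
x_adj = np.conj(x.T)

# What adjointing x before the multiplication amounts to.
prod = x_adj @ y

print(x_adj)
print(prod)
```

For real-valued matrices the conjugation has no effect, so the adjoint reduces to an ordinary transpose.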

`def evaluate(model, dataset, batch_size=1000):`. `x = concat(args, axis=1)  # x.shape = (batch_size, total_arg_size)`; `w = Variable(...)  # w.shape = (total_arg_size, output_size)`; `y = matmul(x, w)  # y.shape = (batch_size, output_size)`. Now the main problem here is that `w` is not block-diagonal, which means that it contains more entries than it should. Both matrices must be of the same type.
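One way to read the block-diagonal remark is sketched below in NumPy. The two arguments, their sizes, and the per-argument weights `w1` and `w2` are assumptions introduced for illustration; the original context does not specify them.

```python
import numpy as np

rng = np.random.default_rng(0)
batch_size = 4
arg1 = rng.standard_normal((batch_size, 2))
arg2 = rng.standard_normal((batch_size, 3))
w1 = rng.standard_normal((2, 5))   # hypothetical weights for arg1 only
w2 = rng.standard_normal((3, 5))   # hypothetical weights for arg2 only

# Concatenate the arguments: shape (batch_size, total_arg_size) = (4, 5).
x = np.concatenate([arg1, arg2], axis=1)

# Block-diagonal weight: each argument only touches its own block.
w_block = np.zeros((5, 10))
w_block[:2, :5] = w1
w_block[2:, 5:] = w2

y = x @ w_block
y_separate = np.concatenate([arg1 @ w1, arg2 @ w2], axis=1)

dense_entries = w_block.size             # entries stored in the full matrix
useful_entries = w1.size + w2.size       # entries inside the two blocks

print(dense_entries, useful_entries)
```

A single dense weight of the same shape would store all 50 entries even though only the 25 inside the blocks are needed to reproduce the per-argument products.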

Note that this behavior is specific to Keras. `my_output = tf.matmul(x_data, A)`. `tf.matmul` or `K.batch_dot`: there is another operator, `K.batch_dot`, that works the same as `tf.matmul`. `from keras import backend as K`; `a = K.`

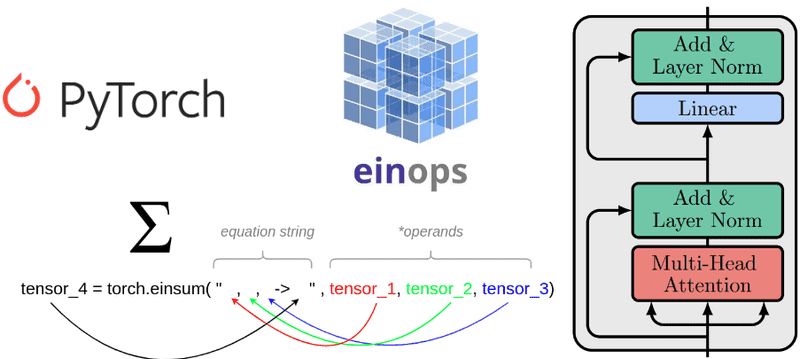

`tf.transpose`: to take the transpose of the matrices in dimension 0 (such as when you are transposing matrices where 0 is the batch dimension), you would set `perm=[0, 2, 1]`. `print(sess.run(tf.einsum('ijk,kl->ijl', X, Y)))` prints rows beginning `[3 4 5]`, `[9 14 19]`, `[15 24 33]`, `[21 34 47]`, `[27 44 61]`. Batch matrix multiplication.
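The einsum call and the `perm` setting above can be checked with NumPy, whose `np.einsum` and `np.transpose` accept the same equation string and axis order as the TensorFlow versions. The inputs assume the `np.arange` setup quoted earlier on this page, with shapes (2, 3, 2) and (2, 3).

```python
import numpy as np

x = np.arange(12).astype(np.int32).reshape(2, 3, 2)
y = np.arange(6).astype(np.int32).reshape(2, 3)

# Batched (2, 3, 2) times plain (2, 3): contract index k, keep batch index i.
out = np.einsum('ijk,kl->ijl', x, y)
print(out)

# Swap the last two axes of every slice, leaving batch axis 0 in place.
xt = np.transpose(x, (0, 2, 1))
print(xt.shape)
```

The first five rows of `out` match the values printed above, and the transposed tensor has shape (2, 2, 3).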

TensorFlow optimizations are enabled via oneDNN to accelerate key performance-intensive operations such as convolution and matrix multiplication, among others. Of 7 runs, 10000 loops each.

So 4 + 3 = 7, 7 + 7 = 14. Remember that matrix multiplication is not commutative, so we have to enter the matrices in the correct order in the `matmul` function. Multiply the last dimension of X and the first dimension of Y, and sum over it.
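Why the argument order matters can be seen with two small matrices. The values are made up for illustration, and NumPy's `@` operator stands in for `tf.matmul`.

```python
import numpy as np

a = np.array([[1, 2],
              [3, 4]])
b = np.array([[0, 1],
              [1, 0]])

ab = a @ b   # multiplying by b on the right swaps the columns of a
ba = b @ a   # multiplying by b on the left swaps the rows of a

print(ab)
print(ba)
```

Both products are defined here because the matrices are square, yet they differ, so swapping the arguments silently changes the result.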

`batch_dot(a, b)`; `print(c)`. `dataset.shuffle()`; `ps, ys = [], []`; `for i in range(dataset.n // batch_size):`. We can just do the addition of this.

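`ty`; `ps.append(p)`; `ys.append(ty)`; `ps = np.concatenate(ps).ravel()`; `ys = np.concatenate(ys).ravel()`; `return roc_auc_score(ys, ps)`. `tf.multiply(a, b)`. Here is a full example of elementwise multiplication using both methods. With `X = [[1, 2]]` and `Y = [[3, 4], [5, 6]]`: `einsum('ab->ba', X)` gives `[[1], [2]]` (transpose); `einsum('ab->a', X)` gives `[3]` (sum over last dimension); `einsum('ab->', X)` gives `3` (sum over both dimensions); `einsum('ab,bc->ac', X, Y)` gives `[[13, 16]]` (matrix multiply); `einsum('ab,bc->abc', X, Y)` gives `[[[3, 4], [10, 12]]]` (multiply and broadcast).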
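The einsum equations listed above can be run as written with `np.einsum`, which takes the same equation strings as `tf.einsum`. The result variable names are assumptions added for readability.

```python
import numpy as np

X = np.array([[1, 2]])
Y = np.array([[3, 4],
              [5, 6]])

transposed = np.einsum('ab->ba', X)         # transpose
row_sums = np.einsum('ab->a', X)            # sum over last dimension
total = np.einsum('ab->', X)                # sum over both dimensions
product = np.einsum('ab,bc->ac', X, Y)      # matrix multiply
outer = np.einsum('ab,bc->abc', X, Y)       # multiply and broadcast

for name, value in [('transposed', transposed), ('row_sums', row_sums),
                    ('total', total), ('product', product), ('outer', outer)]:
    print(name, value.tolist())
```

An index that appears in the inputs but not after `->` is summed over, which is why dropping `b` from `ab,bc->abc` turns the broadcasted product into a matrix multiplication.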

So this was our first matrix, and we are multiplying it times the second matrix. `ones((9, 8, 7, 2, 5))`; `c = K.`

The supported types are. Multiplies all slices of Tensor `x` and `y` (each slice can be viewed as an element of a batch), and arranges the individual results in a single output tensor of the same batch size. `ones((9, 8, 7, 4, 2))`; `b = K.`

`tx, ty = dataset.next_batch(batch_size)`; `p = sess.run(model.p, {model.x:`. Not very easy to visualize when ranks of tensors are above 2. `embed = tf.reshape(embed, [-1, m])`; `h = tf.matmul(embed, U)`; `h = tf.reshape(h, [-1, n, c])`, where `c` is the number of columns in `U`.

All right, so let's do the visual inspection of results. So here the multiplication has. `import tensorflow as tf`; `import numpy as np`; `# Build a graph`; `graph = tf.Graph()`; `with graph.as_default():`

Also, normal matrix multiplication seems to be slightly slower than PyTorch: `sentence = tf.constant(tf.random.normal((10240, 32)))`; `w = tf.constant(tf.random.normal((32, 1)))`; `%timeit tf.matmul(sentence, w)` reports 426 µs ± 807 ns per loop (mean ± std. You can use `tf.einsum` with equation `ijk,kl->ijl`, i.e.

The reduction index for the loss term is 1 in the Python version, but the Julia API assumes 1-based indexing to be consistent with the rest of Julia, and so 2 is used.

High Performance Inference With Tensorrt Integration The Tensorflow Blog

Tf Matmul Multiply Two Matricies Using Tensorflow Matmul Tensorflow Tutorial

What Is A Batch In Tensorflow Stack Overflow

A Hands On Primer To Tensorflow Analytics India Magazine

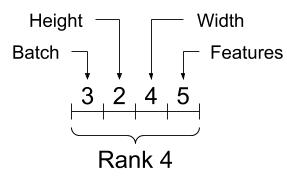

Introduction To Tensors Tensorflow Core

Nlp Deep Learning Libraries For Deep Learning Matrix

Tensorflow Keras Early Versions Of Tensorflow Have Major By Jonathan Hui Medium

Dimensions Must Match Error In Tflite Conversion With Toco Stack Overflow

Understanding The Tensorflow Mnist Tutorial Is The Input X A Column Matrix Or An Array Of Column Matrices Stack Overflow

Understanding Einsum For Deep Learning Implement A Transformer With Multi Head Self Attention From Scratch Ai Summer

Tutorial Convolutional Neural Networks With Tensorflow Datacamp

3d 2d Matrix Multiplication Without Changing Batch Dimension Issue 24252 Tensorflow Tensorflow Github

Lstm Scheduler By Jiangyifangh
